DeepSeek and the Impact of Cheaper Models

Reaction from January 2025 via Q4 2024 Investor Letter (posted late)

[Author Note: This is from January 2025 and was included in the Logos investor update for Q4 2024. I share it here as an external memory bank. It will be important to understand where I was wrong and to prevent false positives.]

A brief addition to the quarterly (Q4 2024) update as the DeepSeek news makes waves across venture capital and public markets.

The headline-grabbing DeepSeek R1 model is a stark example of an existing trend in which the cost of AI models is declining rapidly—with demonstrated efficiency gains across inference and training. This has implications for the application and infrastructure layers of the AI technology stack, the core elements of Logos’ investment focus.

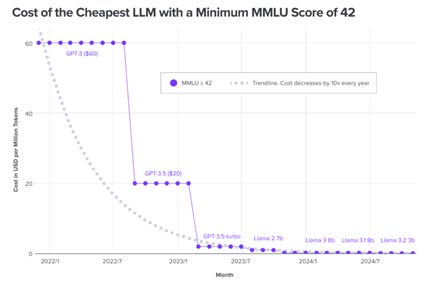

Inference: The DeepSeek R1 model is 50%-85% more efficient than the most comparable alternative (OpenAI's o1) [1]. This magnitude of efficiency gain is not new. R1 and other model advancements continue to reduce the marginal costs of AI-powered applications. In the last three years, we have witnessed cost declines from tens of dollars to single-digit cents (Exhibit 1).

Exhibit 1: Cost of Inference (2022-2024) [2]

Training: DeepSeek R1 was (supposedly) trained for $6M [3], one to two orders of magnitude cheaper than previous state-of-the-art models (e.g., OpenAI’s GPT-4 at >$100M [4] and GPT-5, rumored to be $500M+ [5]). However, this point is highly debated, with new information pointing to significantly more capex at DeepSeek’s disposal [6]. While DeepSeek's methodology, which likely includes both real innovation and "borrowed" data from OpenAI, is a topic of interest, it does not directly impact Logos' investment focus. That said, we are happy to discuss the technical innovations further if relevant to you.
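As a rough check on the "one to two orders of magnitude" comparison, the short sketch below takes the base-10 logarithm of the cost ratios. It uses only the figures quoted above; the GPT-4 and GPT-5 numbers are a lower bound and a rumor respectively, so the results should be read as approximate, and the function name is only illustrative.

```python
import math


def orders_of_magnitude(cheaper: float, pricier: float) -> float:
    """Return log10 of the cost ratio pricier / cheaper."""
    return math.log10(pricier / cheaper)


# Training costs quoted in the text, in millions of USD.
# GPT-4 is quoted as ">$100M" and GPT-5 as a rumored "$500M+",
# so both ratios below are rough lower bounds, not measured values.
r1_cost = 6.0
gpt4_cost = 100.0
gpt5_cost = 500.0

vs_gpt4 = orders_of_magnitude(r1_cost, gpt4_cost)
vs_gpt5 = orders_of_magnitude(r1_cost, gpt5_cost)

print(f"R1 vs GPT-4: {vs_gpt4:.2f} orders of magnitude")
print(f"R1 vs GPT-5: {vs_gpt5:.2f} orders of magnitude")
```

Both ratios fall between one and two orders of magnitude, provided the $6M figure is taken at face value, which is the contested assumption.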

Takeaways

Jevons' Paradox states that when technological advancements drive more efficient use of a resource (such as chips or energy), the demand for and total consumption of that resource can increase rather than fall. In contrast to the underlying sentiment that destroyed $1T+ of market capitalization [7] on Monday, January 27, we believe demand for cloud GPU compute will be massive, necessitating significant increases in power availability and data center capacity.
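The mechanism behind Jevons' Paradox can be illustrated with a toy constant-elasticity demand model. This is a minimal sketch under stated assumptions, not a forecast: the model form and the elasticity values are hypothetical and do not come from the letter. If an efficiency gain of `g` cuts the cost of a unit of useful work by a factor of `g`, and demand for that work grows as `g ** elasticity`, then total resource consumption changes by `g ** (elasticity - 1)`.

```python
def resource_use_multiplier(efficiency_gain: float, elasticity: float) -> float:
    """Change in total resource consumption after an efficiency gain.

    Assumes (hypothetically) constant-elasticity demand: when the cost of a
    unit of useful work falls by `efficiency_gain`, the quantity of work
    demanded rises by efficiency_gain ** elasticity. Each unit of work then
    needs 1 / efficiency_gain as much of the underlying resource.
    """
    work_demanded = efficiency_gain ** elasticity
    return work_demanded / efficiency_gain


# Illustrative values only: a 10x efficiency gain under three elasticities.
for elasticity in (0.5, 1.0, 1.5):
    multiplier = resource_use_multiplier(10.0, elasticity)
    print(f"elasticity={elasticity}: resource use x{multiplier:.2f}")
```

In this sketch, total consumption rises only when the elasticity exceeds 1. The takeaway above amounts to a bet that demand for AI compute is in that regime.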

The conversation on the cost to train DeepSeek R1 is noise in the analysis of the infrastructure needs and the corresponding demands for power and compute. We have always believed that the demand for compute from inference would be orders of magnitude greater than that from training, and we maintain that most inference will occur in cloud data centers due to the memory constraints of edge devices (e.g., laptops, phones) and the networking advantages of data centers.

As we stated during the Fund I fundraise, we believe AI models are becoming commoditized, with open-source driving zero-cost availability. DeepSeek further accelerates this trend, potentially reducing some of the capital barriers that were believed to limit the competitive set. At a minimum, there is another capable AI research lab generating bleeding-edge AI models and presenting them as open source.

The application layer stands to benefit most from state-of-the-art models that are freely available and increasingly performant. We are in the early stages of a long-term trend, where improvements in model performance (quality and cost) unlock new AI use cases and propel demand far beyond what is currently observed or even imagined. The opportunity to build applications that harness cheap, ubiquitous intelligence, and the need for the infrastructure that powers them, have never been greater.

DeepSeek R1 Technical Report.